RAG Demystified Part 1: Why Your AI Doesn't Know Your Business (And How to Fix It)

17 min read • December 14, 2025

#AI #RAG #Machine Learning #LLM #Business

This is Part 1 of a 3-part series on Retrieval-Augmented Generation (RAG):

- Part 1: Understanding RAG and Why It Matters ← We are here

- Part 2: Advanced RAG Techniques (Coming soon)

- Part 3: Building Your First RAG System (Coming soon)

You just spent $50,000 training a custom AI model on your company’s data. It’s finally ready. You’re excited. You ask it a simple question about your product pricing.

It hallucinates. Confidently. Completely wrong.

Models learnt it from Kung Fu Panda :p

Yeah, we’ve all been there. Welcome to the expensive reality of AI in 2025: throwing money at the problem doesn’t automatically solve it.

But here’s the thing - there’s a better way. A way that doesn’t require retraining models every time your data changes. A way that’s saving companies thousands in compute costs while actually making AI useful for their business.

It’s called Retrieval-Augmented Generation, or RAG.

And by the end of this article, you’ll understand exactly why it might be the most practical AI technique you can implement this year. Let’s demystify this thing! :D

The $100 Billion Mistake

Let me tell you a story that perfectly captures the problem.

February 2023: Google is ready to show the world Bard, its answer to ChatGPT. In a promotional demo, someone asks Bard: “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?”

Bard responds confidently that JWST “took the very first pictures of a planet outside of our own solar system.”

There’s just one problem: that’s completely wrong. The European Southern Observatory’s Very Large Telescope took the first exoplanet images back in 2004, years before JWST even launched. The mistake went viral. Google’s stock dropped 7.7%, and over $100 billion in market value evaporated in a single day.

Here’s the uncomfortable question: if Google, with all its resources, data, and expertise, couldn’t prevent its AI from confidently stating falsehoods, what chance do the rest of us have?

The Most Expensive Hallucination in AI History?

The Core Problem: LLMs Live in the Past

LLMs like GPT-4, Claude, and Gemini are impressive. They can write code, analyze data, draft emails, and explain complex concepts. But they have fundamental limitations:

1. They’re frozen in time

ChatGPT was trained on data collected months or years ago. On its own, it has no idea what happened yesterday, last week, or even this morning. (Newer models can pull in real-time web search results, which helps.)

2. They don’t know YOUR data

Unless you specifically trained a model on your company’s information (expensive, time-consuming, impractical), it knows nothing about your internal processes, documents, or data.

3. They can’t distinguish knowledge from guesses

LLMs are pattern-matching machines. When they don’t know something, they don’t say “I don’t know” - they generate plausible-sounding text based on patterns they’ve seen. This is called hallucination, and it’s a byproduct of how these models work rather than a bug you can simply patch out.

Question for you: How many decisions in your organization are being made based on AI-generated information that sounds authoritative but might be completely fabricated?

Enter RAG: The “Open Book Exam” for AI

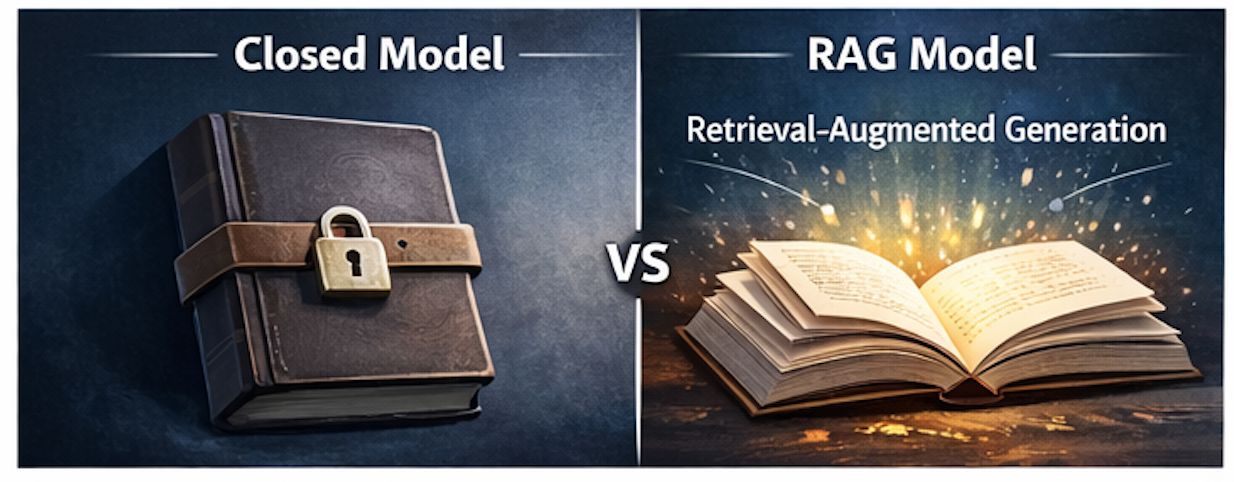

Imagine you’re taking an exam. You have two options:

Option A: Closed-book exam

You walk in with only what you've memorized. If you studied the wrong material, or if the question is about something new since you studied, you're stuck guessing.

Option B: Open-book exam

You can look up the exact information you need, verify it's correct, and then answer based on facts - not memory.

Traditional LLMs are Option A. RAG is Option B.

Traditional LLMs vs RAG: Memory vs. Real-Time Knowledge

Here’s what RAG actually does:

Instead of forcing the AI to memorize everything (impossible) or hallucinate when it doesn’t know (dangerous), RAG lets the AI:

- Search your actual, current documents when asked a question

- Retrieve the most relevant information

- Read what it found

- Generate an answer based on those facts

- Cite its sources so you can verify

This simple change makes a big difference: answers are grounded in your actual documents, and every claim can be checked against its source.

How RAG Actually Works: The 30-Second Version

You don’t need to understand vector databases or embeddings to get the core idea. Here’s the simplified flow (we’ll get into the technical details in Part 3):

Phase 1: Preparation (done once, or when documents update)

- Take all your documents (PDFs, wikis, databases, whatever)

- Break them into chunks (smaller, searchable pieces)

- Convert chunks into mathematical representations (embeddings)

- Store them in a searchable database
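A minimal Python sketch of the preparation phase, under loudly stated assumptions: documents are plain text, chunks are fixed-size windows of words that overlap slightly (so text near a boundary lands in both neighboring chunks), the "embedding" is a toy word-count vector standing in for a real embedding model, and the "searchable database" is a plain Python list. `chunk_text`, `embed`, `Chunk`, and `InMemoryIndex` are hypothetical names, not any library's API, and the default sizes are arbitrary.

```python
import re
from collections import Counter
from dataclasses import dataclass, field


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into fixed-size word windows that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts.

    Hypothetical stand-in for a real embedding model, which would return a
    dense vector that captures meaning, not just exact words.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class Chunk:
    source: str  # where the chunk came from, kept so answers can cite it later
    text: str
    vector: Counter


@dataclass
class InMemoryIndex:
    """Hypothetical stand-in for a vector database: a plain list of chunks."""
    chunks: list[Chunk] = field(default_factory=list)

    def add_document(self, source: str, text: str,
                     chunk_size: int = 200, overlap: int = 40) -> int:
        """Chunk, embed, and store one document; returns how many chunks were added."""
        new = [Chunk(source, c, embed(c)) for c in chunk_text(text, chunk_size, overlap)]
        self.chunks.extend(new)
        return len(new)
```

In a real system you would replace `embed` with an embedding model and the list with a vector database; re-running `add_document` for a changed file (after dropping its old chunks) is the whole update step.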

Phase 2: Query Time (happens every time someone asks a question)

- User asks: “What’s our remote work policy?”

- System converts question to same mathematical format (embedding)

- Searches database for most relevant chunks (similarity search)

- Retrieves top 5-10 matching pieces

- Sends question + retrieved chunks to LLM (as context)

- LLM generates answer based on actual documents

- Returns answer with source citations
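The query-time steps can be sketched the same way. This is again a self-contained illustration with hypothetical helpers: the question goes through the same toy word-count `embed` as the chunks, `cosine` ranks chunks by similarity, `retrieve` keeps the top `k`, and `build_prompt` packs the question and numbered sources into one prompt so the answer can cite them. The two documents are made up, and the actual LLM call is left out because it depends on your provider.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy word-count vector; hypothetical stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse vectors (0.0 means no shared words)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


def retrieve(question: str, chunks: list[dict], k: int = 5) -> list[dict]:
    """Embed the question like the chunks, then return the k most similar chunks."""
    q = embed(question)
    # A real system would reuse vectors stored at preparation time and let the
    # vector database do this ranking; here we embed on the fly for brevity.
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c["text"])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, hits: list[dict]) -> str:
    """Pack the question and numbered sources into one prompt so the LLM can cite them."""
    sources = "\n".join(
        f"[{i}] ({h['source']}) {h['text']}" for i, h in enumerate(hits, start=1)
    )
    return (
        "Answer using only the sources below and cite them like [1]. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Made-up example documents.
docs = [
    {"source": "hr/remote-work.md",
     "text": "Remote work policy: employees may work remotely up to three days per week."},
    {"source": "it/equipment.md",
     "text": "Contact the IT help desk for laptop and equipment issues."},
]
question = "What's our remote work policy?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)  # this prompt is what would be sent to the LLM of your choice
```

Numbering the sources inside the prompt is what makes the final "returns answer with source citations" step possible: the model can only cite what it was shown.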

The magic: the LLM never needs to memorize your policies. It just needs to read them when asked.

Note: This is the simplified version. Advanced RAG systems add steps like reranking, query rewriting, and multi-step retrieval (we’ll cover those in Part 2).

Real-World RAG: Where It’s Actually Saving Time and Money

Let’s get concrete. Here’s where RAG is making a measurable difference (with real numbers):

Case Study 1: Healthcare - Lives Saved Through Current Information

The Problem: Dr. Smith has a patient on five medications. She needs to check for dangerous drug interactions. The clinical guidelines were updated last month with new findings. Her hospital’s AI was trained six months ago.

Without RAG:

- The AI provides outdated interaction warnings

- Dr. Smith either doesn’t trust it (wastes her time) or trusts it (potential patient harm)

With RAG:

- System pulls from the most recent FDA databases, clinical trial results, and updated guidelines

- Retrieves relevant interaction data from documents published last week

- Dr. Smith gets accurate, current, cited warnings

The Impact: Studies show that Clinical Decision Support Systems with RAG achieved a 25-33% reduction in diagnostic errors when properly implemented. One hospital network saw a 30% reduction in misdiagnoses for complex cases and a 40% increase in early detection of rare diseases.

Why it works: Medical knowledge evolves too fast for model retraining to keep up. RAG stays current automatically.

Case Study 2: Customer Support - Accurate Answers at Scale

The Scenario: Your company has 500 help articles, updated weekly. Product features change monthly. Pricing tiers were just revised yesterday.

Without RAG:

- Support agents search multiple systems

- Often find outdated information

- Customers get conflicting answers

- Training new agents takes weeks

With RAG:

- Support agent (or customer) asks question

- System retrieves current, relevant articles

- AI generates accurate answer with links

- Everyone gets consistent, correct information

Real Example: A SaaS company implemented RAG for their support chatbot. First-contact resolution improved by 40%. Support ticket volume dropped by 25%. Average resolution time decreased from 2 hours to 15 minutes.

Case Study 3: Finance - Real-Time Intelligence

The Problem: Market conditions change by the second. An analyst needs insights based on the latest earnings calls, SEC filings, and economic reports.

With RAG:

- Retrieves information from this morning’s market reports

- Pulls relevant sections from earnings transcripts released an hour ago

- Accesses the latest macroeconomic data

- Synthesizes everything into actionable insights

The Edge: In finance, yesterday’s data is ancient history. Traditional models trained on historical data miss the latest signals. RAG provides competitive advantage through current knowledge.

Case Study 4: Legal Research - Finding Needles in Haystacks

The Challenge: A lawyer needs precedents for a complex case. There are 50,000 potentially relevant cases across multiple jurisdictions. New rulings happen daily.

Traditional Approach:

- Days of keyword searches

- Reading hundreds of case summaries

- Might miss relevant precedents with different terminology

- Expensive billable hours

With RAG:

- Searches entire legal database semantically (meaning-based, not just keywords)

- Finds relevant cases even when they use different terms

- Retrieves and summarizes the most pertinent precedents

- Provides citations for verification

- Takes minutes instead of days

The Impact: Legal researchers report 80% time savings on case research. Lawyers spend time analyzing arguments instead of searching documents.

Case Study 5: Internal Knowledge Management - The Onboarding Problem

Picture this: You’re a new employee at a tech company. It’s day one. You need to know:

- How to request PTO

- The remote work policy

- Who to contact for equipment issues

- Where the API documentation lives

- How to submit expenses

Without RAG:

- Email five different people

- Search scattered wikis (is this current?)

- Check Slack history (which channel?)

- Hope you find the right information

- Give up and figure it out yourself

With RAG:

- Ask your company’s AI assistant

- Get instant answers from current documents

- See source citations (click through for full details)

- Confidence that information is up-to-date

- Onboard in hours instead of weeks

Real Impact: A fintech startup implemented RAG pulling from their Google Drive, Confluence, and Slack. New employee productivity reached “normal” levels 60% faster. HR reported 70% fewer repetitive questions.

From Information Chaos to Instant, Sourced Answers

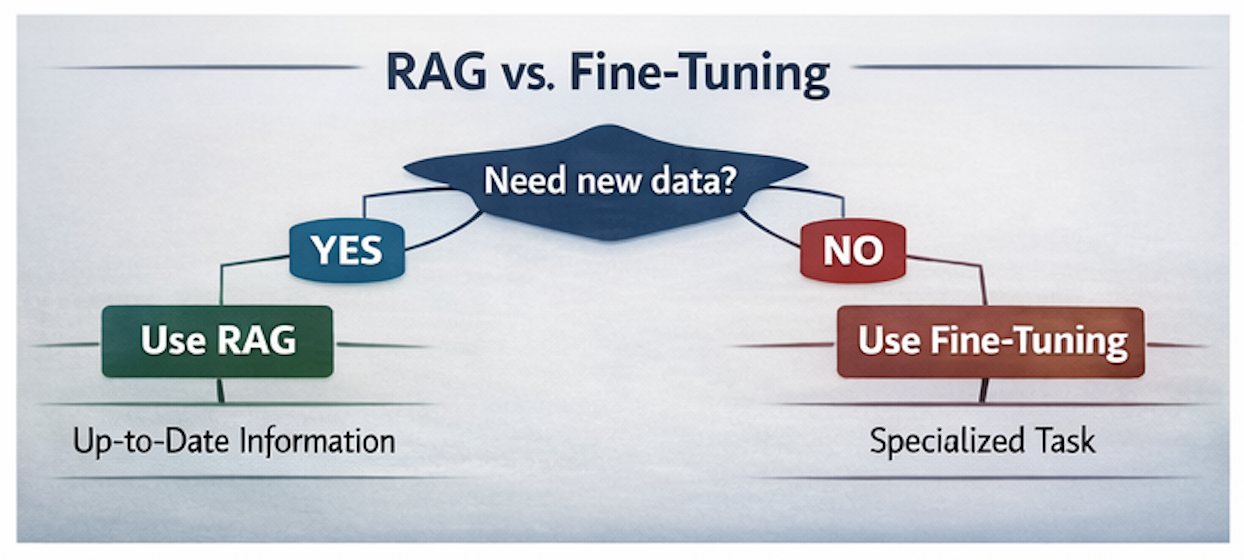

RAG vs Fine-Tuning: When to Use Which?

You’re probably thinking: “Why not just train the AI on our data?”

Valid question. Let’s compare the two approaches honestly (spoiler: both have their place).

Fine-Tuning: Teaching the AI New Skills

What it is: You take a base model (like GPT-4) and continue training it on your specific data.

Best for:

- Teaching consistent style, tone, or format

- Domain-specific terminology and jargon

- Stable knowledge that doesn’t change often

- Tasks requiring deep reasoning in your domain

The costs:

- Time: Days to weeks for training and testing

- Updates: Must re-run fine-tuning when data changes

- Expertise: Requires ML engineers and infrastructure

Example use case: A law firm fine-tunes a model to write in its specific legal style, use its preferred citation format, and apply the firm’s analytical framework. The style is stable, so it’s worth the investment.

RAG: Giving the AI Access to Information

What it is: The AI searches your documents in real-time and answers based on what it finds.

Best for:

- Rapidly changing information (news, prices, policies)

- Multiple evolving knowledge bases

- Need for source citations and transparency

- Quick deployment and iteration

- Factual accuracy over stylistic consistency

The costs:

- Time: Hours to days for initial deployment

- Updates: Add or change documents in minutes

- Expertise: Developers can implement with existing tools

Example use case: A tech company uses RAG for their internal knowledge base. When policies update (which happens monthly), they just add the new document. No retraining needed.

The Comparison Table

| Factor | Fine-Tuning | RAG | Hybrid |
|---|---|---|---|
| Initial Setup | Weeks | Days | Weeks |
| Upfront Cost (USD) | $5K-$50K+ | $500-$5K | $10K-$60K+ |
| When Data Changes | Retrain (days/weeks) | Update docs (minutes) | Retrain + Update |
| Response Speed | Fast | Slightly slower | Slightly slower |
| Source Citations | Not possible | Built-in | Built-in |
| Best Use Case | Stable style/reasoning | Dynamic knowledge | Best of both |

RAG or Fine-Tuning? Decision Framework

The Hybrid Approach: Best of Both Worlds

For high-stakes applications, many organizations use both:

Fine-tune for: Style, tone, reasoning patterns, domain expertise

RAG for: Current facts, company data, evolving knowledge

Example: A healthcare AI that’s fine-tuned to think like an experienced doctor (diagnostic reasoning, bedside manner) but uses RAG to access the latest treatment guidelines, drug interactions, and research findings.

The catch: Increased complexity. You need the infrastructure and expertise for both. Only worth it when accuracy and performance are mission-critical.

The Knowledge Currency Problem: A Real Scenario

Let’s see why RAG matters with a concrete scenario (this happens more often than you’d think):

Monday: Your company launches a new pricing tier. The marketing team updates the pricing page. Sales gets the new deck.

Tuesday: Customer asks your AI chatbot: “How much does the Enterprise plan cost?”

With a fine-tuned model:

- It was trained on data from two months ago

- It gives the old pricing

- Customer is confused

- Sales team has to correct it

- Trust in AI system erodes

- You need to retrain the model (days of work)

With RAG:

- Marketing added the new pricing doc yesterday

- System retrieves current pricing information

- AI answers with correct, current prices

- Includes link to pricing page for verification

- Customer is happy

- Zero additional work needed

This isn’t a hypothetical. This exact scenario plays out hundreds of times a day across companies using AI for customer interaction.

The bottom line: If your information changes more often than you can realistically retrain models, RAG isn’t optional; it’s essential.
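To make the "zero additional work needed" step concrete, here is a hypothetical sketch of a knowledge base keyed by document source: publishing the new pricing page overwrites the old version, and search returns more recently updated documents first. `KnowledgeBase`, the prices, and the dates are illustrative assumptions, and naive keyword matching stands in for embedding search; a production system would use its vector database's own update mechanism.

```python
import re
from dataclasses import dataclass, field
from datetime import date


def words(text: str) -> set[str]:
    """Lowercased word set used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class KnowledgeBase:
    """Hypothetical minimal knowledge base: one current version per document source."""
    docs: dict[str, tuple[date, str]] = field(default_factory=dict)

    def upsert(self, source: str, updated: date, text: str) -> None:
        # Publishing a new version overwrites the stale one. Nothing is retrained.
        self.docs[source] = (updated, text)

    def search(self, query: str) -> list[tuple[str, str]]:
        # Naive keyword overlap stands in for real embedding similarity.
        q = words(query)
        hits = [(updated, source, text)
                for source, (updated, text) in self.docs.items()
                if q & words(text)]
        hits.sort(reverse=True)  # most recently updated documents first
        return [(source, text) for _, source, text in hits]


kb = KnowledgeBase()
# Illustrative, made-up prices and dates.
kb.upsert("pricing.md", date(2025, 10, 1), "Enterprise plan costs 99 dollars per user per month.")
# Monday: marketing publishes the new pricing tier.
kb.upsert("pricing.md", date(2025, 12, 8), "Enterprise plan costs 129 dollars per user per month.")
# Tuesday: the chatbot's retrieval step only ever sees the current version.
print(kb.search("How much does the Enterprise plan cost?"))
```

The design choice that matters is keying documents by source: an update replaces the old text instead of sitting next to it, so retrieval cannot surface the stale price.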

The Limitations You Should Know About

I’d be lying if I said RAG is perfect. Let’s talk about the real challenges (because nobody else will):

Challenge #1: Garbage In, Garbage Out

RAG is only as good as what it retrieves. If your documents are:

- Poorly organized

- Outdated (even if the files were recently modified)

- Contradictory

- Missing key information

Then RAG will retrieve poor information and generate poor answers. The old software principle applies: garbage in, garbage out.

The fix: You need reasonable document quality and organization. The good news? You probably needed this anyway.

Challenge #2: It’s Not Magic

RAG doesn’t make the underlying LLM smarter. It gives it better information to work with. If the LLM struggles with complex reasoning, RAG won’t fix that; it will just give it better context to reason about.

Challenge #3: Retrieval Isn’t Perfect

Sometimes the system retrieves:

- The wrong chunks (information that seems relevant but isn’t)

- Too little context (the answer is split across chunks it didn’t get)

- Too much irrelevant information (diluting the relevant stuff)

Recent research finding: Google Research found that insufficient context can actually make things worse. In their experiments, models went from 10.2% incorrect answers with no context to 66.1% incorrect answers with insufficient context.

The implication: Retrieval quality matters more than you think. (We’ll cover optimization techniques in Part 2.)
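One simple guard against handing the model insufficient context, offered here as an assumption rather than the method from the research above, is to drop retrieved chunks whose similarity falls below a threshold and let the system say "I don't know" when nothing clears it. In this sketch, word-overlap (Jaccard) similarity stands in for embedding similarity, `select_context` is a hypothetical helper, and `min_score=0.2` is an arbitrary illustrative value you would tune on your own data.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1]; a stand-in for embedding similarity."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def select_context(question: str, chunks: list[str],
                   k: int = 3, min_score: float = 0.2) -> list[str]:
    """Return up to k chunks whose similarity clears min_score, best first.

    An empty result is the caller's signal to answer "I don't know" instead of
    passing weak, insufficient context to the LLM.
    """
    scored = sorted(((jaccard(question, c), c) for c in chunks), reverse=True)
    return [c for score, c in scored[:k] if score >= min_score]


chunks = [
    "Remote work policy: employees may work remotely up to three days per week.",
    "Cafeteria menu for Friday.",
]
print(select_context("remote work policy", chunks))  # only the policy chunk clears 0.2
print(select_context("parking rules", chunks))       # nothing relevant: answer "I don't know"
```

A threshold trades recall for safety: set it too high and the system refuses answerable questions, too low and weak context slips through again.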

Challenge #4: Latency Increases

RAG adds steps to the process:

- Convert question to searchable format

- Search database

- Retrieve relevant chunks

- Send larger prompt to LLM (including retrieved context)

- Generate response

For most applications (knowledge bases, research tools, customer support), adding 100-500ms is fine. For real-time chat where every millisecond counts, it matters.

Challenge #5: Costs Scale Differently

Every RAG query includes:

- Embedding generation (converting question to searchable format)

- Database search

- Larger prompts to the LLM (because you’re including retrieved context)

At high volume, this adds up. One engineer calculated RAG pushed their average prompt from 15 tokens to 500+ tokens. At millions of queries per month, token costs matter.
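The back-of-the-envelope math is simple: monthly input-token cost = queries × tokens per prompt × price per token. The sketch below assumes a hypothetical price of $1 per million input tokens and one million queries a month (check your provider's actual pricing and your own traffic). Under those assumptions, going from 15 to 500 tokens per prompt moves input cost from $15 to $500 a month, roughly 33× more. It ignores embedding and database costs.

```python
def monthly_prompt_cost(queries_per_month: int, tokens_per_prompt: int,
                        usd_per_million_tokens: float) -> float:
    """Input-token cost per month = queries x tokens per prompt x price per token."""
    return queries_per_month * tokens_per_prompt * usd_per_million_tokens / 1_000_000


PRICE = 1.00         # hypothetical USD per million input tokens; use your provider's real rate
QUERIES = 1_000_000  # hypothetical monthly query volume

without_rag = monthly_prompt_cost(QUERIES, 15, PRICE)  # short prompt: question only
with_rag = monthly_prompt_cost(QUERIES, 500, PRICE)    # question + retrieved chunks
print(f"without RAG: ${without_rag:,.2f}/month, with RAG: ${with_rag:,.2f}/month "
      f"({with_rag / without_rag:.0f}x)")
```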

The counterpoint: Fine-tuning has its own scaling costs: multiple model versions, constant retraining, GPU expenses. You need to do the math for your specific situation.

Is RAG Right for You?

Here’s when you should seriously consider RAG (and when you shouldn’t):

✅ You should use RAG if:

- Your organization has valuable knowledge scattered across documents

- Information changes frequently (weekly, monthly, even quarterly)

- You need to cite sources and maintain transparency

- You’re building support tools, research systems, or knowledge bases

- Quick deployment and iteration matter

- Accuracy on factual information is critical

❌ You should think twice if:

- Your knowledge is completely static (and will remain so)

- Your documents are in complete chaos (fix that first)

🤔 You should experiment to find out if:

- You’re not sure how often your information changes

- You’re balancing accuracy, latency, and cost constraints

- You’re in a hybrid situation (some stable knowledge, some dynamic)

- You want to understand the cost-benefit for your specific case

What’s Next in This Series

We’ve covered the fundamentals: what RAG is, why it matters, when to use it, and real-world applications.

Coming in Part 2: Advanced RAG Techniques

- Hypothetical Document Embeddings (HyDE)

- Query decomposition and multi-step retrieval

- Reranking for better results

- Self-RAG and agentic approaches

- GraphRAG for complex relationships

- Security and access control

- Optimization strategies that actually move the needle

Coming in Part 3: Building Your First RAG System

- Step-by-step implementation with code

- Choosing embeddings, vector databases, and LLMs

- Chunking strategies that work

- Measuring and improving retrieval quality

- Production deployment considerations

- Cost optimization techniques

- Real debugging scenarios and solutions

The Bottom Line

By 2025, RAG has moved from experimental to essential for organizations serious about AI. Enterprises have turned to RAG to boost accuracy and keep responses current without the cost and complexity of constant retraining.

The question isn’t whether RAG is useful; it’s whether you’ll implement it strategically now or wish you had started sooner.

Here’s what I recommend right now:

If you’re exploring AI for your organization:

- Identify your top 3 knowledge management pain points

- Calculate what outdated or inaccessible information costs you

- Start with one focused use case

- Pilot RAG before committing to expensive fine-tuning (it can be your quick win!)

If you’re already using AI:

- Audit where your current systems hallucinate or provide outdated info

- Identify which knowledge bases change frequently

- Test RAG on one problematic area

See you in Part 2, where we dive deep into making RAG work even better.

Got questions about RAG for your specific use case? Want to share your experiences? Let’s connect on Twitter or LinkedIn.

If this helped you understand RAG, share it with someone who’s struggling with AI hallucinations or outdated model knowledge. They’ll thank you later (and maybe me as well!).

References

- Google’s Bard Error Costs $100 Billion - NPR, February 2023

- Bard’s Factual Error About JWST - CNN, February 2023

- Market Impact of Bard Error - Fortune, February 2023

- RAG Reduces Diagnostic Errors by 25-33% - MakeBot AI, 2024

- Hospital Network RAG Implementation Results - ProjectPro, 2024

- When Not to Trust Language Models: Insufficient Context - Google Research, November 2023

- Retrieval-Augmented Generation in Healthcare: Comprehensive Review - MDPI, September 2025